FireCheetah returns

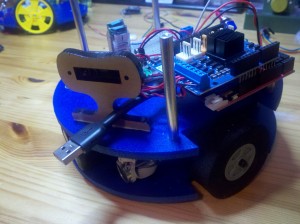

Last year I participated in the Abington firefighting robotics competition, in which a robot must navigate a maze, find a candle, and put it out. We entered two divisions: the K-6 division, where kids are allowed to remote-control the robot, and the senior division, where all actions must be autonomous. The kids won their division, but I did not do so well in mine. Ever since then I have been thinking about a rematch. Now the time has come.

For the previous contest I only had two months to build a robot from scratch, order all the parts, learn the firefighting paradigm, figure out a wiring system, get a software environment set up, and ensure that the robot could perform in both autonomous and non-autonomous mode. Two months is not a long time for all of this, and after a lot of sleep deprivation I was proud to get something together that nearly worked. Now I have the luxury of more time, and only need to make improvements instead of starting from the beginning.

A better drivetrain

One of my biggest problems last year was the motors. I used Solarbotics GM8s with matching encoders, wheels, and mounting brackets. Although they were supposed to be a set, they never fit together very well. The encoder connectors were so frail I had to make my own. The motor did not sit square in the bracket (Solarbotics suggests drilling out the case a bit, which is rather annoying for a custom part and also didn’t work perfectly). The wheels were too thin to navigate the carpet. And the motors were extremely sensitive: even a short stall rendered them unreliable for hours.

So I decided to start with a high-quality motor, then try several wheel options connected to the shaft with a hub. At the competition someone suggested that I use a Faulhaber motor, and I found these on Goldmine for only $12 a pair. Each has a high-quality encoder built in and integrated with the main motor connector.
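For readers new to motor encoders, here is a minimal sketch of how such an encoder's output might be turned into a position count. It assumes a two-channel quadrature encoder (channels A and B, 90 degrees out of phase). That is common for built-in motor encoders, but the text above does not confirm it. The class name is hypothetical.

```cpp
#include <cstdint>

// Hypothetical quadrature decoder for a two-channel (A/B) encoder.
class QuadratureDecoder {
 public:
  // Start from the channels' current levels so the first update doesn't miscount.
  explicit QuadratureDecoder(bool a = false, bool b = false) : prev_(encode(a, b)) {}

  // Call with the current logic levels of channels A and B (e.g. from a pin-change interrupt).
  void update(bool a, bool b) {
    const std::uint8_t state = encode(a, b);
    // Indexed by (previous state << 2) | new state.
    // +1 = one step forward (A leads B), -1 = one step back,
    // 0 = no change, or an invalid jump where both channels changed at once.
    static const std::int8_t kStep[16] = {
        0, -1, +1, 0,
        +1, 0, 0, -1,
        -1, 0, 0, +1,
        0, +1, -1, 0};
    count_ += kStep[(prev_ << 2) | state];
    prev_ = state;
  }

  long count() const { return count_; }

 private:
  // Pack the two channel levels into a 2-bit state: A is bit 1, B is bit 0.
  static std::uint8_t encode(bool a, bool b) {
    return static_cast<std::uint8_t>((a ? 2 : 0) | (b ? 1 : 0));
  }

  std::uint8_t prev_;
  long count_ = 0;
};
```

Every valid transition counts as one step, so this decodes four counts per encoder cycle. On an AVR board, `update()` would typically run in a pin-change interrupt. In that case the count should be `volatile` and read with interrupts briefly disabled, because a 32-bit read is not atomic on an 8-bit chip.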

These are right-angle output gear motors that come with a gear attached to the output shaft. My hex keys were unable to remove it, and the one that I special ordered also failed. I finally used my Dremel to carefully cut into the set screw and get that gear off. In one case I unfortunately nicked the output shaft, rendering that motor incapable of fitting tightly with the wheel hub, but I had spares and the others were successful.

I bought a number of different wheel systems to test but have started with the Lynxmotion hub, which uses a set screw to hold it on the output shaft. I learned that most motor output shafts are not perfectly round but D-shaped, and it is very important to tighten the hub set screw against that flat; otherwise it won’t grip properly and the wheel will not spin true. For wheels, again, there are many options. I am starting with a neoprene set that is thicker and slightly smaller than the ones from last year.

Finally, I had to figure out how to attach the motors to the platform. With the right-angle gearbox in place I cannot reach any of the screw holes built into the motor. After some failed experiments I used a technique from The Robot Builder’s Bonanza: I drilled holes in a plastic strap and screwed it down snugly over the cylindrical motor bodies. Normal screws did only a so-so job, so I ordered longer screws from McMaster-Carr, a great hardware resource because their shipping charges are modest and they get items to you fast.

The big honking battery in the middle works very well but is also heavy and probably more voltage than I need. I have some much smaller 7.4V LiPos to try.

Early results are very promising. Here is a video of the robot doing circles on my office rug. With the Solarbotics setup it was never able to move here. And this is without any casters installed — the platform is dragging on the carpet!

Using the Arduino Eclipse plugin

Aside from the motors, software bugs were the other large problem. Indeed, a last-minute error there killed FireCheetah’s navigation in the competition. Navigating to the right software file to make changes was also extremely time consuming. The default Arduino IDE is designed to have everything in one file. I had 20 files, not including the Robot Operating System code.

On my previous laptop I had Microsoft Visual Studio, and after last year’s competition I tested the Visual Micro Arduino plugin with some success. If you have Visual Studio, I’d try that first. However, my new laptop does not have Visual Studio and I no longer qualify for the academic version. It seemed rather wasteful to buy Visual Studio for this one project. The best-known open source alternative is Eclipse, so I thought I would give it a try.

There are some rather involved and outdated instructions on the Arduino site for integrating Eclipse with WinAVR. There is also an Arduino Eclipse plug-in. It was pretty clear that this wouldn’t be as polished as Visual Micro, but I figured it was worth a shot.

Installing the Arduino Eclipse plug-in is not for the faint of heart. A couple of tips if you decide to try it:

- Put Eclipse in a directory with no spaces — NOT Program Files.

- Put the Arduino environment in a directory with no spaces too. Confirm that you can use the Arduino IDE to compile and upload a simple program.

- Eclipse suggests a workspace directory. ACCEPT IT. If you go elsewhere, Windows 7 in particular may not let you write there and this causes all sorts of problems. This is especially true as the first time you use a particular board combination the plug-in will try to create a separate project for that board so that you can link to its static library.

- If you use an Arduino library, you must Import it, not just add a reference to it.

- The plugin instructs you to set certain Arduino files to be indexed up front. This is not possible in the latest version of Eclipse, so identifiers like Serial will be highlighted in red as “undefined” even though the build may work. To fix this, index manually and/or add the path to Arduino.h manually.

- The very first time you create an Arduino sketch, you must have the board plugged in, as otherwise you won’t have a COM port to choose from and the configuration cannot be completed.

- If you have to start over and re-install Eclipse — which I had to do about 5 times, not knowing everything here — you must completely delete Eclipse, the workspace directory, and any .eclipse or Eclipse directory in the Documents or Users area.

Painful as this was, the results were worth it. Eclipse is quite a nice IDE. I can now see the first lines of a function’s code just by hovering over a reference to it, jump to an object’s source file with one click, and find all uses of a particular variable or function. These are tremendous productivity enhancements. The build process also found a serious bug right away (don’t ignore those warnings!). I have successfully compiled code and uploaded it to the robot with this setup. The only key tool I haven’t tried yet is the serial monitor, because the plugin website has no written instructions for it. After downloading a 32 MB video file, it appears you pull it up via Window -> Views -> Other -> Arduino -> Serial monitor. I do prefer written instructions, but hey, this is free software.

Easier physical connection to the Arduino board

To update the robot’s software, it’s necessary to connect a USB cable from the Arduino to the computer. The Arduino sits under the motor shield and behind an IR sensor and quite a few wires. Snaking the USB cable in was a bit of a pain. At Abingdon someone suggested using a very short USB cable left connected to the Arduino, then using a USB extender as needed to connect that to the computer. This works nicely.

USB short cable plugged into the Arduino. When the robot is fully assembled this platform is underneath another and harder to reach.

Another hassle was the XBee. We need this to be connected for teleoperation and for Serial debugging when the robot is running disconnected from the computer. However, to upload new Arduino code it is necessary to unplug the XBee from the Arduino or the upload won’t complete. I intend to put in a switch, instead of pulling the wires each time.

Simpler circuits

Instead of using my home-built high-side switch to turn on the fan, I will use the ULN2803s I ordered when writing my post on adding power and pins to the Arduino. It’s conceptually simpler, and safer: my other version did not have kickback diodes, while the ULN2803 has clamp diodes built in. I’m not sure why I didn’t do this before; I simply didn’t remember it. That’s sleep deprivation in action.

I never built a sound activation circuit last year, but I had ordered individual components and started wiring them to a breadboard — quite a few components, and messy. Since then I’ve found a simpler audio circuit that uses an LM567, which I now have and will use instead.
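The LM567's center frequency is set by one timing resistor and one timing capacitor. The sketch below uses the datasheet approximation f0 ≈ 1 / (1.1 · R1 · C1). It assumes that approximation holds for your part, so check the datasheet. The function names are hypothetical.

```cpp
// Approximate LM567 center frequency (Hz) from timing resistor R1 (ohms)
// and timing capacitor C1 (farads): f0 ~= 1 / (1.1 * R1 * C1).
double lm567CenterHz(double r1Ohms, double c1Farads) {
  return 1.0 / (1.1 * r1Ohms * c1Farads);
}

// Inverse of the same approximation: the R1 needed to hit a target
// frequency with a chosen C1.
double lm567TimingResistorOhms(double targetHz, double c1Farads) {
  return 1.0 / (1.1 * targetHz * c1Farads);
}
```

Take a 0.022 µF capacitor and a 3.5 kHz target (a placeholder, not necessarily the competition's start tone). The formula then calls for roughly 11.8 kΩ. A trimmer potentiometer in series makes it easy to tune the detector to the actual tone.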

It is nice to be able to work at a more relaxed pace and hopefully do more things right this time.

Repairing my own laptop

This was a short but sweet project — very empowering.

For years I have owned a Dell Precision M4300. It is not a sexy computer by today’s standards, but it has a very large screen, comfortable keyboard, runs fast, and has all the ports I need. Therefore, no reason to replace it. I figured at some point something would die on it — as it has for all my previous laptops — and then I’d upgrade. But this guy has kept on ticking.

A few weeks ago, the laptop finally developed a problem. The lid would no longer stay propped up at the correct angle. It either fell all the way back, or closed. Not a comfortable way to work! I could prop the lid up with pillows but clearly this was going to need to be fixed. I thought about upgrading now, but it seemed a little wasteful to do it for what was clearly a mechanical issue. Also, I am pretty lazy. This is my development computer for work and as such has quite a lot of customized programs installed on it, the kind that don’t come with Windows Installers. Even with a migration utility it would be a real hassle to set everything up on a new machine, plus I’d have to get a new operating system and no one had anything good to say about Windows 8. (Yes, I need Windows for work…I do have a USB drive with Linux that plugs in also.) I also have an old, matching docking station which would need to be swapped out too.

So next option, repair. I have no objection to paying for skilled labor, but losing the machine for a couple of weeks wasn’t appealing either. I decided to do a little research. After Googling around I found quite a few YouTube videos about laptop lid issues. These were not directly applicable — my hinges were completely shot so tightening elsewhere would not help — but one anonymous genius suggested using the service manual to learn how to open up your laptop. And indeed, Dell has a very nice service manual for the Precision M4300 which is freely available. Opening up a laptop is not for the faint of heart but suddenly this seemed quite manageable.

There is a thriving market for Dell parts and I was able to find replacement hinges for $20 a pair. I propped up the lid for the next few days until they arrived, then I got to work. There were lots of little screws to be taken out, a few places where some force was needed (not too much), and where necessary I took photos to make sure I would be able to reassemble things. I had to go to the point where the LED screen was almost removable from the lid, but eventually got to the hinge:

I replaced the hinge, put everything back together, and held my breath while the computer rebooted. Success! The laptop was fine — with a nice steady screen angle. Total time to tear down / reassemble was well under an hour.

I am very impressed with Dell for making the service manuals so accessible. The fact that Dell is so well known made it easy to find replacement parts, also. This will certainly be an incentive to keep buying from them in the future.

Free Coursera online course: Heterogeneous Parallel Programming

I’ve posted before about Stanford and Udacity free online courses. Tomorrow I am going to be starting another one, offered by Coursera. The topic is Heterogeneous Parallel Programming. From the course description:

All computing systems, from mobile to supercomputers, are becoming heterogeneous parallel computers using both multi-core CPUs and many-thread GPUs for higher power efficiency and computation throughput. While the computing community is racing to build tools and libraries to ease the use of these heterogeneous parallel computing systems, effective and confident use of these systems will always require knowledge about the low-level programming interfaces in these systems. This course is designed for students in all disciplines to learn the essence of these programming interfaces (CUDA/OpenCL, OpenMP, and MPI) and how they should orchestrate the use of these interfaces to achieve application goals.

Putting this in plain English, the processor on your computer’s graphics card is extremely good at processing large amounts of data in parallel, and quickly. We’ve all seen the increase in realism in computer graphics. It turns out that these techniques can be used elsewhere, for example with high-volume scientific data. The catch is that you have to use a radically different programming paradigm; otherwise you get no performance benefit. I brushed up against this in a computer graphics course, but we had little time to go in depth. Hopefully this will be a chance to gain a deeper understanding.

It will also be good to get back into some maker projects. The firefighting robot burned me out a bit, frankly. Of all the challenges I’ve undertaken in the past decade, it was the most demanding both physically and mentally (yes, it took more of a physical toll than Mountains of Misery; lack of sleep will do that). A good deal of creative energy has gone into learning the art of cooking, which culminated in a successful Thanksgiving. At some point I will post about all of that, as I’ve managed to do some interesting things beyond the sous-vide experiments. For now I am looking forward to this programming course, and an upcoming Nova Labs series that will ultimately grant me access to their new laser cutter.

Robot competition day

After two months of work, the big day was finally here. Friday night we drove up to Philadelphia with lots of tools and the robot nestled in a box at my feet. The kids were very excited, and we all brought matching robot t-shirts to wear. Saturday morning we showed up bright and early for the Abington firefighting robot competition. I was not sure what to expect and wanted to be absolutely certain of getting a spot with power and a place to test the robot. As it turned out, the organizers had some very nice lab classrooms for us, and I didn’t need my power strip and long extension cord. I tested all the sensors on the robot and everything seemed in order.

According to the website, there were 54 robots registered for the competition. There were a large number of Vex robots and even more Lego NXT robots. Only a handful of robots were non-kit ones like mine. Most of the Vex robots were part of a local club. I had some very good conversations with the guys who run the club. They were very interested in my bot because of its use of expanded rigid PVC and the Arduino platform, a switch they are considering. It turns out that Vex robots are extremely expensive. They showed me one of their entries, which had a fairly large metal frame and large wheels, along with a number of plug-in sensors, about 5 in total by my guess. They told me the cost of the bot was $850! In contrast, I had encoders, far more sensors, and wireless communication at a parts cost under $150. Had I had the time, I could also have added things like line sensors for less than a dollar. Now, it is certainly true that I spent more than $850 on this project. That covered equipping my workshop with loads of parts “for the next project”, the power saw, and replacing Parts That Burned Out as I learned how to use my power supply correctly. (The list of the fallen includes a motor, two encoders, a switching voltage regulator, and an XBee with breakout board.) Still, that is quite a price difference.

When I made it over to the practice arena, there were some bad surprises in store. Most of the maze floor was hardwood; well and good, as that is what I had at home. They also had carpet. I knew this was coming, but had expected relatively thin carpet. Not true. This was thick, and worse, it was uneven in height with patterns. On my first practice run, the robot stopped dead. Since I was not running at top speed, I changed the code to boost the speed greatly (knowing that I might pay a price in accuracy in the hardwood portion of the maze).

I knew that narrow wheels were not going to work well on that carpet; they would get stuck in the grooves. As luck would have it, that rug was on the path to the single room I had chosen. And so, while I had wanted to avoid any last-minute code changes, I decided to go for another room instead, where there was no carpet. The first thing that happened after that was that the robot started running backwards continuously, reporting that it was stalled. It turned out I had overwritten an array. That had nothing to do with the stall code, but it was another example of how sensitive the system was to any coding error. I fixed that, but lost more time.

Another weird thing: when I started my practice runs, the robot would instantly swerve left into the wall. Since this was practice, I could simply hit the reset button, and then it ran fine. This happened on both practice runs. Not a good feeling.

The competition started with the high school students. Another surprise, as I saw with a sinking heart that there were now two carpets, and no way to avoid them. We watched as 11 robots in a row failed to put out the candle. Robot 12 succeeded…then there was another string of failures. That robot was the only one in the division to make it, and later in the day it was not able to do it again in the second round.

It was surprising how many robots made it into the room but were unable to put out the candle. Some of them were thrown off by candle reflections on the white walls — I had uncovered this potential problem in testing at home. The biggest problem, though, is that so many of them avoided using a powerful fan. You get a 25% time reduction factor for using non-air methods. So there were lots of balloons and a few sponges. Only problem is, if you aren’t exactly in the right position, those methods don’t work well. Some folks used fans, but wimpy ones; I am guessing those are the ones that worked most easily with the kits. By contrast, I had a fan designed for providing thrust on airplanes and a dedicated servo to sweep it rapidly back and forth. It was loud as hell but if I got anywhere near the candle, it was going out.

The interesting thing is that there was a much easier way to get a time reduction — 20% — by having your robot start with tone activation instead of a button press. This was a no-brainer for score improvement, and I had bought all the parts (a few dollars) but didn’t have time to build it. Yet almost none of the contestants used it. I realized that this is probably because the kits don’t offer a plug-in version of a tone detector.
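To see why the tone start is such an easy win, here is a minimal sketch of how the two time reductions might combine. It assumes each reduction simply multiplies the raw run time. The text does not state the actual rule, so treat this model and its names as hypothetical.

```cpp
// Hypothetical scoring model: each reduction multiplies the raw run time.
// The percentages are the ones described above; the combination rule is assumed.
struct RunOptions {
  bool toneStart = false;           // 20% time reduction for tone activation
  bool nonAirExtinguisher = false;  // 25% time reduction for non-air methods
};

double adjustedTimeSeconds(double rawSeconds, const RunOptions& opt) {
  double t = rawSeconds;
  if (opt.toneStart) t *= 0.80;
  if (opt.nonAirExtinguisher) t *= 0.75;
  return t;
}
```

Under this model, a 100-second run drops to 80 seconds with a tone start alone. With both reductions it drops to 60 seconds.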

Next was the junior division. They did not have the carpets to deal with, and so, there were a decent number of successes. About halfway through this, I found out that Laurel and Holly’s division had been moved up so that they were now going to be next, not the original schedule. I ran back to reprogram the robot for remote control, plug in the laptop XBee and haul everything back. All in all, quite a bit of running around.

It was worth it though, because the kids did a great job of driving the robot and putting out the candle, even though we’d had zero time to practice. Here is the video:

Then came the first run for the senior division. Once again, I put my robot in and wham, straight into the left wall. I later figured out that this was a software bug introduced the morning before, when I hastily added the required button switch. That put a delay in the code, and I put it in the wrong place, so the sensors were calibrating while the robot was still outside the maze.
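The fix for that bug is mostly about ordering: wait for the start button first, and calibrate only once the robot is sitting in the maze. The sketch below uses hypothetical names. The callbacks stand in for real Arduino calls such as `digitalRead()` and `delay()` so the logic can be tested off the board. On the board itself you would call the pin functions directly, since the standard AVR toolchain does not ship `std::function`.

```cpp
#include <functional>

// Hypothetical hardware hooks standing in for digitalRead(), delay(), etc.
struct StartHooks {
  std::function<bool()> buttonPressed;
  std::function<void()> calibrateSensors;
  std::function<void(unsigned long)> delayMs;
};

// Block until the start button is pressed, then calibrate.
void runStartSequence(const StartHooks& hw) {
  while (!hw.buttonPressed()) {
    hw.delayMs(10);  // poll the button; nothing has been calibrated yet
  }
  hw.delayMs(500);         // placeholder settle time after the press
  hw.calibrateSensors();   // runs only once the robot is in its start position
}
```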

At the time, though, I had no idea what was going on. So, with dark thoughts about my motors, I quickly put in an edit so that if the robot hit a wall, it would back up and then turn right.
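A back-up-and-turn fix like that can be written as a small time-based state machine, so the main loop never blocks while the robot recovers. The sketch below uses placeholder durations, and `nowMs` would come from `millis()` on the board. The names are hypothetical.

```cpp
// Hypothetical bump-recovery state machine: on hitting a wall, reverse for
// a fixed time, pivot right for a fixed time, then resume driving.
enum class Mode { Driving, BackingUp, TurningRight };

struct Recovery {
  Mode mode = Mode::Driving;
  unsigned long phaseStartMs = 0;
  static constexpr unsigned long kBackupMs = 400;  // placeholder
  static constexpr unsigned long kTurnMs = 300;    // placeholder

  // Call every loop iteration; returns what the motors should be doing now.
  Mode step(unsigned long nowMs, bool hitWall) {
    switch (mode) {
      case Mode::Driving:
        if (hitWall) { mode = Mode::BackingUp; phaseStartMs = nowMs; }
        break;
      case Mode::BackingUp:
        if (nowMs - phaseStartMs >= kBackupMs) { mode = Mode::TurningRight; phaseStartMs = nowMs; }
        break;
      case Mode::TurningRight:
        if (nowMs - phaseStartMs >= kTurnMs) { mode = Mode::Driving; }
        break;
    }
    return mode;
  }
};
```

The code computes elapsed time as `nowMs - phaseStartMs` using unsigned values. That keeps the timing correct even when `millis()` wraps around.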

Surprisingly enough, this worked decently for the second run and the robot at least made it down the hallway heading straight. But, then it hit the dreaded carpet, and a wheel got stuck in the grooves. And that was that!

Only one robot in the senior division successfully completed two runs (I think). A man named Paul B. had brought a beautiful pair of robots in for a swarm demonstration. Two robots working in coordination searched each room for the candle and put it out, choreographing their moves, and returning home to nestle back together at the start. Needless to say they were also well capable of putting out the candle acting alone. They moved wonderfully smoothly, with no carpet issues.

After the show I talked with Paul a bit. The first thing I asked about was the motors, and these were indeed high-grade medical motors that cost over $100 each new. (Mine were $8!) Like me, he had encoders, and interestingly enough they had about the same number of counts per revolution. Since his were integrated into the motors, it was much cleaner. I had 10 wires going into my breadboard from my encoders, and while they never came loose, it was ugly. His robots didn’t seem to have any wires anywhere, though there must have been some. Lovely PCBs with surface-mount components made for a spare, elegant design.

Although I would have liked to do better, we had a great time at the event. The kids got two very nice trophies, and I met a lot of cool people, too many to mention here. The organizers were friendly, not to mention that we got free food, and the entire event was free! I’m very glad I came here instead of Trinity.

Goals for next year:

- Have more time to get everything done and tested!

- Use different wheels. Those were my biggest limitation. I want them smaller, wider, and with better traction.

- The wheels were part of the motor/encoder package. Since I was not at all thrilled with the motors, I will upgrade to a higher-quality pair with integrated encoders.

- Even with good motors, relying on a robot to go straight is never robust design. I will spend much more time building in recovery behavior. With all the sensors I have it is possible to choose a reasonable course of action. Most robots in the show hit a wall at some point and then they were doomed.

- My battery was a little big and heavy. Might change it. On the other hand I have to say it performed well.

- Designing PCBs would be fun, though of course this assumes you have a fixed plan for that component!

I’m happy to say I’ve crossed off a big item on the list already, namely moving to a real development environment for the Arduino instead of the toy one that comes with it. Turns out Visual Studio has an Arduino plug-in that works great and is effortless to install.

And now, time to catch up on real life.

Finishing the firefighting robot

As I write, we are on our way to the Abington Firefighting Competition. Up until last night, I was not certain that we would make it. In the previous post I mentioned various gremlins with the motor control system. Running at a lower voltage more or less made them go away. It worked so well that I could no longer use current surges for stall detection, because they weren’t large enough. I did add the heatsink too when it arrived. Nevertheless, there were enough little problems that it was hard to get an unbroken block of time to debug. And I had many new features to add, including the fire extinguisher.

I am very proud of my fire sensor. It is based on the Rockhopper design, but since there were no schematics I had to figure a few things out. I found that using four photodiodes instead of one did increase sensitivity, and gave a sharper angular peak — very desirable if oriented properly. As usual, there was some trial and error. I discovered that the photodiode responds to the frequencies emitted at the base of the candle flame. Thus the tea candles that I originally tested with were a disaster, because when they burned for a little while the interior hollowed out and covered that part of the flame. The diodes are also sensitive to candle height, so I spread them out to cover the maximum allowable range in the competition, 2 inches.
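A sweep could pick the candle direction as follows. At each servo angle, read the four photodiodes and sum them. Then keep the angle with the largest total above a noise threshold. Summing in software, the threshold, and the names are all assumptions; the original sensor may combine the diodes in hardware.

```cpp
#include <array>
#include <vector>

// One reading taken during a servo sweep (hypothetical structure).
struct SweepSample {
  int angleDeg;
  std::array<int, 4> diodes;  // raw ADC readings; higher = more flame light (assumed)
};

// Returns the angle with the strongest summed reading, or -1 if no sample
// exceeds minTotal (i.e. no candle seen in this sweep).
int peakFlameAngle(const std::vector<SweepSample>& sweep, int minTotal) {
  int bestAngle = -1;
  int bestTotal = minTotal;
  for (const SweepSample& s : sweep) {
    int total = 0;
    for (int v : s.diodes) total += v;
    if (total > bestTotal) {
      bestTotal = total;
      bestAngle = s.angleDeg;
    }
  }
  return bestAngle;
}
```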

Figuring out how to mount everything on increasingly limited space was a challenge as well. Ultimately the flame sensor went on a butter container, with the fan mounted on the side. All of these were then attached to a servo, which sits in a rectangular opening cut nicely by my new power saw. Again, all very time consuming.

So by yesterday I realized that I would not have time to get the navigation reliable for the entire maze. And therefore, I have lowered my sights a bit. Abington offers a “single room mode”, where you can pick ahead of time the place you would like the candle to be. As long as your robot can make it to that one room, then, you are in good shape. There is a 3-minute penalty added to your time, which means that you are not going to win unless everyone else crashes (or chooses the same option). I was disappointed to have to do this, but I suspect I’m not the only one. Looking on YouTube, there are a suspiciously high number of videos with robots finding the candle in the first room they look at, and it’s the easiest room to get to.

I have had a number of surprises working on this project. Everything felt very rushed, as I had only two months to work and no experience. I had to make design compromises. These included using an Arduino Mega, motor shield, and breadboards, as opposed to wiring everything individually. One surprise was how well this worked. I thought there would be issues with wires popping out of the breadboard or falling out of the Arduino headers. As it turned out, they worked extremely well. I cut the wires precisely and used the proper housings and…it just worked. What did not work well were the screw terminals. I had many problems with the battery leads coming out and the same with the motor leads. Since screw terminals are supposed to be more reliable, this was an unpleasant discovery.

The Arduino works with C or C++. After years of working with Java and its automatic memory management, I knew going back to C++ would be painful. It was worse than expected. If you write a buggy program on a computer, you usually get a nice error message. On a microcontroller, your only clue may be that things suddenly start to act weird. And in the worst case, you can completely lose the ability to communicate with the board and get rid of the faulty code. At one point I thought a coding error had bricked an expensive board. Finally I found a suggestion to put a large capacitor between the reset and ground pins to prevent automatic resets, and that eventually worked. Quite a scare, though. Flawed code is quite easy to write, with no warnings about null pointer dereferences or uninitialized variables. I learned to put in a delay of a few seconds at the beginning of the program to allow some time for communication before any errors surfaced. That was not 100% foolproof, though, as global objects are constructed before that delay runs. So I had to make the constructors dead simple and call separate setup functions on the objects later. Even then, sometimes, problems.
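The constructor-versus-setup pattern looks roughly like this. The sketch uses a hypothetical sensor class and placeholder pin numbers. The global object's constructor only stores configuration. Anything that touches hardware or could fail moves into an explicit `begin()`, which `setup()` calls after the start-up delay.

```cpp
// Hypothetical sensor class illustrating "dead simple constructor, separate setup".
class SonarSensor {
 public:
  // Runs before setup(), so it only stores configuration.
  SonarSensor(int triggerPin, int echoPin) : triggerPin_(triggerPin), echoPin_(echoPin) {}

  // Called explicitly from setup(), once the serial link has had time to come up.
  bool begin() {
    if (triggerPin_ < 0 || echoPin_ < 0) return false;  // reject bad configuration
    // On the real board, pinMode() calls and other hardware setup would go here.
    ready_ = true;
    return true;
  }

  bool ready() const { return ready_; }

 private:
  int triggerPin_;
  int echoPin_;
  bool ready_ = false;
};

// Global instance: constructing it does nothing risky.
SonarSensor leftFrontSonar(22, 23);
```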

I did get to appreciate how much work the Arduino folks did to make a microcontroller usable by “normal” people. Microcontrollers are hard to work with. The trade-off is that you lose power; for example, the board runs at a much slower clock speed than needed, because changing that would mean rewriting older code. And the Arduino IDE is hardly suitable for a large code base (which I eventually had), with no line numbers and certainly no way to find function usages. There was no time to configure another IDE this round, but next time, that will happen.

This was one of the hardest things I have ever done. The intellectual challenges were constant, with no time to delay or rest. It was harder than my big challenge last year, Mountains of Misery; as hard as that was, it lasted only ten hours, and only the last of those was truly hard. This required intense drive for weeks, and there were many times I was tempted to throw in the towel.

This morning I tuned the candle-finding algorithm, put in the required red start button, and added two joystick functions the kids will need for their remote control portion: turning the fan on/off and swiveling the servo. The Robot Operating System has done very well for the remote control part; it was fun to work with.

Here is a video of FireCheetah navigating the maze and putting out a candle. I got it working even better by the afternoon, but this should give a good idea. Let’s hope he does as well tomorrow!

FireCheetah navigates on his own

With less than a week to go before the robot competition, I have been working very hard. Most of the previous week was spent on navigation. With this particular maze design, there is a section where the robot is forced to move and do some turns without having a wall to guide it. I wanted to master this, knowing that it would be the hardest part. After a great deal of work (and stress) I figured out how to get the robot to go through reliably. Here is a video of the robot in action:

Along the way, I ran into two problems that nearly drove me crazy and cost a week of time:

- The robot would drive straight at times, but other times not. And when it wasn’t going mostly straight, navigation failed.

- Even when the robot went straight, it overshot the target distance. After some experimentation, I was able to compensate for this with various fudge factors in the software. However, these factors change on different surfaces, and the Abington maze has a variety of them.
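The basic conversion from a distance to encoder counts, with a per-surface correction like those fudge factors, might look like this. Wheel diameter, counts per revolution, and the factor values are placeholders, not the robot's real numbers.

```cpp
constexpr double kPi = 3.14159265358979323846;

enum class Surface { Hardwood, Carpet };

// Placeholder correction factors, tuned per surface by experiment.
double surfaceFactor(Surface s) {
  switch (s) {
    case Surface::Hardwood: return 0.95;  // stop early to absorb overshoot (placeholder)
    case Surface::Carpet:   return 1.00;  // placeholder
  }
  return 1.0;
}

// One wheel revolution covers pi * diameter, so
// counts = distance / (pi * diameter) * countsPerRev * surface factor.
long targetCounts(double distanceCm, double wheelDiameterCm, double countsPerRev, Surface s) {
  double revolutions = distanceCm / (kPi * wheelDiameterCm);
  // Round to the nearest count (distances are assumed non-negative).
  return static_cast<long>(revolutions * countsPerRev * surfaceFactor(s) + 0.5);
}
```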

The first problem was by far the worst, as it was very frustrating to see a well-behaved machine suddenly turn into something that could not move an inch without listing severely to one side. Not always the same side! One step I took was to add more sensors: two side-facing sonars on the left. These helped with initial wall alignment and reduced the element of chance. Still, when the robot was in bad shape, nothing could help.
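With two side sonars a known distance apart, the robot's angle to the wall falls out of simple trigonometry. The sketch assumes both sensors point straight out to the left and the wall is flat. The function name and sign convention are hypothetical.

```cpp
#include <cmath>

// Heading error relative to the left wall, in degrees, from two side-facing
// sonars mounted sensorSpacingCm apart along the robot's left side.
// Equal readings mean the robot is parallel to the wall.
// Sign convention (assumed): positive = nose pointing away from the wall.
double wallAngleDeg(double frontCm, double rearCm, double sensorSpacingCm) {
  const double kRadToDeg = 180.0 / 3.14159265358979323846;
  return std::atan2(frontCm - rearCm, sensorSpacingCm) * kRadToDeg;
}
```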

Eventually I figured out that the robot generally started its day working well, then degraded at a certain point. The first thing I looked at was the battery. But that wasn’t it. I found that leaving the robot alone for a few hours would allow it to recover, even without charging the battery. (The LiPo battery I’m using is a champion, and well worth the money. It never seems to hiccup or run out of juice. A world of difference from standard alkaline.)

Then I thought about what could be stressing the robot. It is well known that when the motors stall (under power but unable to move, probably because your robot hit a wall), the current surges. In theory, my motor controller was rated for a much higher current than the stall current of my motors. But these events dissipate a lot of power, which means a lot of heat and a potential thermal shutdown of the L298 chip on the motor controller board. So I took the robot when it was fresh and running straight, then deliberately stalled the motors. Sure enough, that killed any straight motion for several hours.

I added checks in the software to detect a stall and cut power to the motors if one is detected. It’s probably a good idea to have the robot back off and/or reposition itself too. Simply protecting the motors gave a big improvement. I’ve also concluded that there is no need for me to run the bot at 12V. I have far more torque than I need and have only been running at 70% of top speed. If I lower the voltage to 9V, the power drawn during a stall is only about 60% of what it is at 12V. I will have to experiment and see whether lowering the voltage causes any problems. Finally, I’ve ordered a heatsink to keep the chip from overheating. Many experienced roboticists recommend using one; if nothing else, it extends the life of your chip. It was a bit of a challenge to find a heatsink for a surface-mount component.
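The roughly 60% figure follows from a simple model. When the shaft is stalled there is no back-EMF, so the motor looks like its winding resistance R. The power drawn from the supply then scales with the square of the voltage. Under that assumption:

```latex
I_{\text{stall}} = \frac{V}{R}, \qquad
P_{\text{stall}} = V \, I_{\text{stall}} = \frac{V^2}{R}, \qquad
\frac{P_{\text{stall}}(9\,\text{V})}{P_{\text{stall}}(12\,\text{V})} = \left(\frac{9}{12}\right)^2 = 0.5625
```

So the ratio under this model is about 56%. The heat generated inside the L298 itself depends mostly on its internal voltage drop times the current. That heat therefore falls less steeply, roughly in proportion to the stall current (to about 75%).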

Next, the travel overshoot. On my hardwood floor I could predict it fairly well, but that wasn’t good enough for general use. It finally occurred to me that there should be a way to actively brake the motors. Up to this point I was simply applying voltage to the motors; at a higher duty cycle they moved faster. To stop, I sent no voltage, but the shaft could keep spinning for a while. After some research, I discovered that the L298 has a built-in brake that activates by setting both direction pins HIGH while the enable pin is also high.
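For reference, the function table in the L298 datasheet for one bridge channel fits in a small lookup. This is a sketch with hypothetical names: which direction counts as "forward" depends on how the motor is wired, and on most boards the enable pin doubles as the PWM input.

```python
from enum import Enum


class Drive(Enum):
    FORWARD = "forward"
    REVERSE = "reverse"
    BRAKE = "brake"
    COAST = "coast"


def l298_pins(mode):
    """Return (enable, in1, in2) logic levels for one L298 bridge channel.

    With enable high, equal inputs drive both motor terminals to the same
    rail, which brakes the motor (the datasheet's "fast motor stop").
    With enable low the outputs float and the motor coasts to a stop.
    """
    if mode is Drive.FORWARD:
        return (1, 1, 0)
    if mode is Drive.REVERSE:
        return (1, 0, 1)
    if mode is Drive.BRAKE:
        return (1, 1, 1)   # both inputs HIGH; (1, 0, 0) also brakes
    if mode is Drive.COAST:
        return (0, 0, 0)   # inputs don't matter while enable is low
    raise ValueError(f"unknown mode: {mode}")
```

The difference between BRAKE and COAST is exactly the overshoot problem: cutting the voltage (coast) lets the shaft spin down on its own, while braking actively stops it.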

Wonderful news, but my lousy motor controller did not allow direct access to those pins. I had already noted that it also grounded the current sense pins, which are an easy way to detect a stall. I had bought it for its economical use of Arduino pins…bad idea. Time to replace it with the Arduino Motor Shield, conveniently available at local RadioShack stores (for once I did not have to pay exorbitant shipping to get a needed part quickly). The Arduino Motor Shield gives you both current sensing and dynamic braking! And if you don’t need them, you can easily cut the jumpers and free up the pins. It costs $10 more than the SparkFun product but is a better design. It uses the L298P, the surface-mount package of the L298 chip.
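With current sensing available, a stall can be detected directly from the motor current. The conversion below assumes the Arduino Motor Shield Rev3’s documented sense output (about 1.65 V per amp, i.e. 3.3 V at its 2 A maximum) and a 5 V, 10-bit Arduino ADC; verify both against your own board. The class name, trip current, and sample count are hypothetical placeholders, and the code is Python for readability rather than Arduino C++.

```python
# Assumed Arduino Motor Shield Rev3 figures (check your board's documentation):
SENSE_VOLTS_PER_AMP = 1.65   # 3.3 V output at the 2 A maximum
ADC_REF_VOLTS = 5.0          # default analog reference on a 5 V Arduino
ADC_MAX_COUNT = 1023         # 10-bit ADC


def counts_to_amps(counts):
    """Convert a raw analogRead() value from a current-sense pin to amps."""
    volts = counts * ADC_REF_VOLTS / ADC_MAX_COUNT
    return volts / SENSE_VOLTS_PER_AMP


class CurrentStallGuard:
    """Trip when current stays above a limit for several consecutive samples.

    Requiring consecutive samples ignores the brief inrush spike when a
    motor starts. The limit and sample count are placeholders to tune.
    """

    def __init__(self, limit_amps=1.2, samples_needed=5):
        self.limit_amps = limit_amps
        self.samples_needed = samples_needed
        self._over = 0

    def update(self, counts):
        """Feed one ADC reading; return True once the stall limit is reached."""
        if counts_to_amps(counts) > self.limit_amps:
            self._over += 1
        else:
            self._over = 0
        return self._over >= self.samples_needed
```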

Navigation has consumed more time than I would have liked, but I have done some work on the fire detection as well. Most of that will be covered in another post. I did want to show off the new toy I bought to cut a hole for the detection servo:

This saw can be used for straight and mitre cuts, and as a scroll saw. I purchased the attachment to cut circles as well. I’ve been wanting a saw for a very long time, but was intimidated at the thought of buying one. The ones I used in the woodworking club seemed far too big for a home. The Amazon reviews were very helpful, and sure enough, this one proved extremely easy to use. It opens up many possibilities for new projects.

FireCheetah is running

Good news — FireCheetah’s remote control mode is working! Here is a video of the bot in action:

The robot is actually quite fast. I had to slow it down to 30% of full speed to control it reliably by hand. The weight is unevenly distributed right now as well, which adds to the challenge. I have heard that victory in robot combat is often determined by the skill of the driver, and I believe it. Driving well takes practice. Fortunately, this part of the event is not timed; the kids can take their time getting the robot to where it needs to be.
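One way to apply that 30% cap without changing the driving feel is to mix the joystick axes into wheel commands first and scale afterwards. Here is a hypothetical Python sketch; the function name, the deadzone, and the sign convention for the turn axis are assumptions (controllers and drivers differ), and here a positive turn value turns left, matching ROS’s counterclockwise-positive angular velocity.

```python
def joystick_to_wheels(forward_axis, turn_axis, max_scale=0.3, deadzone=0.1):
    """Map two joystick axes (-1..1) to left/right wheel duty cycles.

    max_scale caps top speed (0.3 = 30% of full power); deadzone ignores
    small stick drift around center. Positive turn_axis turns left.
    """
    def shape(axis):
        if abs(axis) < deadzone:
            return 0.0
        return max(-1.0, min(1.0, axis))

    linear = shape(forward_axis)
    angular = shape(turn_axis)
    # Arcade-style mixing: turning speeds one wheel up and slows the other.
    left = linear - angular
    right = linear + angular
    # Normalize so neither wheel exceeds full scale, then apply the speed cap.
    biggest = max(1.0, abs(left), abs(right))
    return (left / biggest * max_scale, right / biggest * max_scale)
```

Normalizing before scaling keeps the ratio between the wheels intact, so a hard turn at full stick still curves the same way; it just happens more slowly.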

As planned, for remote control I am using ROS on a PC talking to the Arduino on the robot. The ROS portion was surprisingly easy. Where I did have difficulty was with the limits of the microcontroller. One painful discovery is that if your program crashes, you won’t necessarily know it, and very weird things can happen. (However, you may see the reset button flash as the micro reboots.) A keep-alive signal of some kind is a good idea. I went down some long rabbit holes before realizing that a program crash was the issue. The crashes, in turn, were caused by pin manipulation in class constructors, which ran before the microcontroller was ready. This cost me a few days, which is very expensive at this point.
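A keep-alive can be watched from the PC side, which is where the Python ROS code runs anyway. The sketch below assumes a hypothetical scheme in which the Arduino periodically sends an incrementing counter (over rosserial it could be published as, say, a `std_msgs/UInt32` topic). Silence means the robot is stuck, and a counter that jumps backwards means it quietly rebooted. The class and method names are illustrative.

```python
import time


class HeartbeatMonitor:
    """Track keep-alive messages from the robot.

    Call beat() whenever a heartbeat arrives; alive() returns False once more
    than timeout_s seconds pass without one, the cue to stop sending drive
    commands. A counter that goes backwards suggests the micro rebooted.
    """

    def __init__(self, timeout_s=0.5, clock=time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock            # injectable so the logic can be tested
        self._last = None
        self._last_counter = None
        self.resets_seen = 0

    def beat(self, counter):
        """Record a heartbeat carrying the robot's running counter."""
        if self._last_counter is not None and counter < self._last_counter:
            self.resets_seen += 1     # counter restarted: likely a crash/reboot
        self._last_counter = counter
        self._last = self.clock()

    def alive(self):
        """True if a heartbeat arrived within the last timeout_s seconds."""
        return self._last is not None and self.clock() - self._last <= self.timeout_s
```

On the robot side, the AVR hardware watchdog (`wdt_enable()` / `wdt_reset()` from `avr/wdt.h`) is a complementary safeguard: it resets a hung program, and the counter check above would then reveal that the reset happened.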

I am changing the architecture for the autonomous mode. I won’t be using ROS. The bandwidth limitations became obvious even in remote-control mode, my sensor suite is limited, and the robot needs to move fast. More significantly, I did some research on previous winners of the Trinity firefighting competition. Wall following is a proven technique, and can be done in an elegant way.
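The post doesn’t describe the wall-following method itself, so here is a generic proportional follower as an illustration, not the author’s code. It assumes two left-facing sonars (front and rear) a known distance apart, like the side sonars mentioned above. The difference between the two readings gives the robot’s angle to the wall, and their average gives its distance. Function name and gains are hypothetical, and the code is Python for readability.

```python
import math


def left_wall_follow(d_front, d_rear, baseline, target,
                     k_dist=2.0, k_angle=1.5, max_turn=1.0):
    """Proportional left-wall follower using two left-facing sonars.

    d_front, d_rear: readings from the front and rear left sonars (same units)
    baseline: spacing between the two sonars along the robot's side
    target: desired distance from the wall
    Returns an angular command; positive means turn left (toward the wall).
    """
    # Heading relative to the wall: positive when the nose points away from it.
    angle = math.atan2(d_front - d_rear, baseline)
    # Perpendicular distance from the wall at the midpoint between the sensors.
    distance = 0.5 * (d_front + d_rear) * math.cos(angle)
    # Too far (positive error) or angled away (positive angle): steer left.
    turn = k_dist * (distance - target) + k_angle * angle
    return max(-max_turn, min(max_turn, turn))
```

Using the angle term as well as the distance term is what keeps this from weaving: the robot starts straightening out before it reaches the target distance instead of overshooting it.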

There is a lot of work to be done in the next few weeks. I need to mount the sensors, and build the circuits for the fan and candle detection.

Firefighting robot update

The firefighting robot competition is only a few weeks away, and I’ve been working steadily. Because of the time pressure, there hasn’t been much time to blog about it! I’ve accomplished the following tasks, and may write about them later. A partial list:

- Set up wireless communication between the Arduino-controlled robot and a PC running the Robot Operating System (ROS), using XBees and the rosserial library.

- Made a robot descriptor file, and learned how to publish the basic frame and joint transforms to visualize the robot in ROS’s rviz.

- Created a foamboard maze for testing. I didn’t want a huge maze taking up floor space in my house, and the contest arena is 8′ by 8′. To my surprise the web was useless on this, so I had to design it from scratch. My maze is light, portable, and easily taken apart or reconfigured. These foam connectors are the key; it took time to track them down.

- Learned how to use wheel encoders, and debug them properly. Getting it right required using my cool palm-sized oscilloscope. Added debouncing code to improve accuracy.

- Learned enough about the ROS navigation stack to send fake odometry messages and see the robot move in rviz.

- Converted the contest arena schematic into a 3D obstacle map for ROS.

- Wrote the basic differential motor controller for the Arduino to move the wheels, collect odometry information, and correct the velocity based on a PID system. Much of this was built from scraps of code found in various places online. It is not tested yet.

- Got a Logitech F310 game controller, installed the ROS joystick driver stack, and “drove” a simulated robot. This works via ROS velocity commands, identical to what the real robot will need. The joystick stack was not compatible with my version of ROS and it took some hair-pulling to fix. After managing it, I went in and added the details to the official ROS documentation so that others won’t suffer. The kids have enjoyed driving the fake robot around.

- Figured out a nice system of crimp connectors and matching housing to wire the robot up.

- Purchased and received the necessary parts for the robot construction, including chassis, wheels, motors, motor brackets, encoders, standoffs, and ultrasonic sensors.
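The differential motor controller item above combines two pieces: turning encoder ticks into a pose estimate (odometry) and correcting wheel speed with PID. Since the author’s version isn’t shown and isn’t yet tested, here is a generic, hypothetical sketch of both in Python. The names, the midpoint-integration approximation, and the integral clamp are my choices, not the post’s.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0
    y: float = 0.0
    theta: float = 0.0


def update_odometry(pose, left_ticks, right_ticks, ticks_per_rev,
                    wheel_radius, wheel_base):
    """Advance a differential-drive pose by one interval's encoder ticks.

    left_ticks/right_ticks: ticks counted since the previous update
    wheel_base: distance between the two wheel contact points
    Uses the midpoint approximation, which is accurate for the small
    per-update motions you get when this runs every few milliseconds.
    """
    per_tick = 2.0 * math.pi * wheel_radius / ticks_per_rev
    d_left = left_ticks * per_tick
    d_right = right_ticks * per_tick
    d_center = 0.5 * (d_left + d_right)
    d_theta = (d_right - d_left) / wheel_base
    mid_theta = pose.theta + 0.5 * d_theta
    return Pose(pose.x + d_center * math.cos(mid_theta),
                pose.y + d_center * math.sin(mid_theta),
                pose.theta + d_theta)


class PID:
    """Textbook PID with a clamp on the integral term (simple anti-windup)."""

    def __init__(self, kp, ki, kd, integral_limit=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral_limit = integral_limit
        self._integral = 0.0
        self._prev_error = None

    def update(self, setpoint, measured, dt):
        """Return a correction from the error between setpoint and measurement."""
        error = setpoint - measured
        self._integral += error * dt
        self._integral = max(-self.integral_limit,
                             min(self.integral_limit, self._integral))
        if self._prev_error is None:
            derivative = 0.0          # no history on the first call
        else:
            derivative = (error - self._prev_error) / dt
        self._prev_error = error
        return self.kp * error + self.ki * self._integral + self.kd * derivative
```

In a velocity loop, one PID per wheel would compare the commanded wheel speed with the speed measured from the encoder ticks and add the correction to the PWM duty cycle.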

Upcoming tasks include figuring out the full navigation and localization stacks for ROS, which seems rather daunting at the moment. I also need to convert the ultrasonic sonar data into something that ROS can use, since its navigation stack is designed for laser scans or Kinects. With that, I will have a full framework for a robot capable of autonomous navigation.
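One way to make a handful of sonars look like a laser scanner is to place each reading into the nearest beam of a sparse 360° scan and mark every other beam as "nothing detected" (+inf, which is how I understand ROS REP 117’s convention for no return). The sketch below uses a plain dataclass that only mirrors the main `sensor_msgs/LaserScan` fields, so it runs without ROS. The function name, beam count, and the 2 cm to 4 m range limits (typical HC-SR04 figures) are assumptions.

```python
import math
from dataclasses import dataclass, field


@dataclass
class ScanLike:
    """Plain-Python stand-in mirroring the main fields of sensor_msgs/LaserScan."""
    angle_min: float
    angle_max: float
    angle_increment: float
    range_min: float
    range_max: float
    ranges: list = field(default_factory=list)


def sonars_to_scan(readings, num_beams=360, range_min=0.02, range_max=4.0):
    """Spread a few sonar readings into a sparse 360-degree scan.

    readings: dict mapping sensor angle in radians (robot frame, 0 = straight
    ahead, counterclockwise positive) to measured range in meters.
    Beams with no sonar, or with an out-of-range reading, stay at +inf.
    """
    increment = 2.0 * math.pi / num_beams
    ranges = [math.inf] * num_beams
    for angle, rng in readings.items():
        # Wrap the angle into [-pi, pi) and find the nearest beam index.
        wrapped = (angle + math.pi) % (2.0 * math.pi) - math.pi
        index = int(round((wrapped + math.pi) / increment)) % num_beams
        if range_min <= rng <= range_max:
            ranges[index] = rng
    return ScanLike(angle_min=-math.pi,
                    angle_max=-math.pi + increment * (num_beams - 1),
                    angle_increment=increment,
                    range_min=range_min, range_max=range_max,
                    ranges=ranges)
```

A sonar beam is a wide cone rather than a thin ray, so this treats each reading more precisely than it deserves. ROS also has a `sensor_msgs/Range` message intended for sonars, which may be worth comparing.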

The only major area not looked at yet in depth is the candle itself. Once in the proper room, we need to detect the candle and put it out. It seems that this will not be too hard, so I am leaving it for the end.

Looking at all this, I can see I will never write about all of it. But for those topics that are not well covered elsewhere online, I will give it a shot.

How to configure an XBee on Linux, Mac, or any other operating system

Consider this post a corrective to all the over-complicated advice I’ve seen out there.

If you own an XBee, you likely already know that the official configuration tool, X-CTU, only runs on Windows. As a result, if you need to configure an XBee on a different operating system, a Google search brings up repeated suggestions to install a Windows emulator, install X-CTU, then manually update some drivers.

Now, this is fine if you already have or want an emulator, but really…there is no need for such a heavyweight solution. You can fully configure your XBee via the serial port and a terminal program.

Older versions of Windows come with HyperTerminal pre-installed, but for Linux I downloaded the free minicom program. (Mac…you are on your own.) Before starting, you need to know the port the XBee is attached to and the communication settings, just as you would with X-CTU. For a factory-fresh XBee, the communication settings will be:

- baud rate: 9600

- data bits: 8

- parity: None

- stop bits: 1

- hardware flow control: None

To find the port the XBee is using, follow the directions for your operating system: on Linux, use udevadm; on Windows, look in Device Manager.

For Linux minicom, I recommend the terminal options of turning on local echo and adding linefeeds.

Once your terminal program is up and running, open the connection to your XBee port. Type the string +++ quickly without pressing the Enter key, then wait; the XBee needs about a second of silence before and after the +++. You should get back a reply of “OK” from the XBee. If it doesn’t work, wait 10 seconds and try again. If it fails repeatedly, you may be at the wrong communication settings; usually it is a matter of mismatched baud rate. But if you’ve totally forgotten the XBee settings, you can use this simple method to do a hardware XBee factory reset.

After you’ve gotten the OK, type

AT

You should get another OK back. The +++ puts the XBee into command mode, and a bare AT simply confirms that it is listening. Command mode is a temporary state! Until it times out (after 10 seconds without a command, by default), any information sent to the serial port will be used to command the XBee, and the XBee will not forward it on to any listeners.
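The same handshake can be scripted if you’d rather not type it by hand. This sketch takes any object with `write(bytes)` and `read_until(bytes)` methods, such as a pyserial `Serial` port opened with a read timeout. The function name and the 1.1-second pause (slightly longer than the default one-second guard time) are my assumptions.

```python
import time


def enter_command_mode(port, guard_time_s=1.1, sleep=time.sleep):
    """Put an XBee into AT command mode and confirm it answers AT.

    port: any object with write(bytes) and read_until(bytes), e.g. a pyserial
    Serial opened at the XBee's current baud rate with a read timeout.
    The XBee wants silence before and after "+++", and no carriage return
    after it. Returns True if both "+++" and "AT" are answered with OK.
    """
    sleep(guard_time_s)               # silence before the escape sequence
    port.write(b"+++")                # no Enter key
    sleep(guard_time_s)               # silence after it
    if port.read_until(b"\r").strip() != b"OK":
        return False
    port.write(b"AT\r")               # a bare AT just asks "are you there?"
    return port.read_until(b"\r").strip() == b"OK"
```

With pyserial installed, usage might look like `enter_command_mode(serial.Serial("/dev/ttyUSB0", 9600, timeout=2))`, where the device path is only an example. pyserial defaults to 8 data bits, no parity, one stop bit, and no flow control, which matches the factory settings listed above.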

The most common change is that of baud rate. Type

ATBD

The XBee will reply back with 3, which equates to a baud rate of 9600. Let’s say that we want to increase this to 57600. We type in

ATBD 6

and get back OK. To write the change to the XBee’s memory, type

ATWR

At this point, the XBee is set to communicate at 57600 baud. Once it leaves command mode (type ATCN, or let it time out), you must change the communication settings in your terminal program in order to continue.

If you ever have to pause and figure out what you are doing, just type +++ again to put the XBee back into command mode.

Another common change is the PAN ID. XBees ship with a default PAN ID, and you will want to avoid crosstalk with other people’s networks. The command for this is ATID. As before, issuing a plain ATID tells you the current value (probably 3332, the factory default), and sending a command like ATID 1414 will change the PAN ID to 1414. Like other AT parameters, the value is in hexadecimal.
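The baud-rate codes and PAN ID formatting are easy to get wrong by hand, so a small helper can build the command list. The BD table below covers the standard XBee 802.15.4 rates (3 = 9600, 6 = 57600, matching the example above). The function name is hypothetical, and ending with ATWR (save) and ATCN (exit command mode) is my choice of sequence.

```python
# BD parameter values for the standard XBee 802.15.4 (Series 1) baud rates.
BAUD_CODES = {1200: 0, 2400: 1, 4800: 2, 9600: 3,
              19200: 4, 38400: 5, 57600: 6, 115200: 7}


def config_commands(baud=None, pan_id=None):
    """Build the AT command strings for a baud-rate and/or PAN ID change.

    pan_id is an integer; XBee AT parameters are hexadecimal, so 0x1414 is
    sent as "ATID 1414". The list ends with ATWR (save to non-volatile
    memory) and ATCN (leave command mode).
    """
    commands = []
    if baud is not None:
        if baud not in BAUD_CODES:
            raise ValueError(f"unsupported baud rate: {baud}")
        commands.append(f"ATBD {BAUD_CODES[baud]:X}")
    if pan_id is not None:
        if not 0 <= pan_id <= 0xFFFF:
            raise ValueError("PAN ID must fit in 16 bits")
        commands.append(f"ATID {pan_id:X}")
    commands += ["ATWR", "ATCN"]
    return commands
```

Each string would be sent followed by a carriage return, waiting for the OK reply before sending the next. If the baud rate changes, reopen the port at the new speed before talking to the XBee again.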

There is a full list of XBee configuration commands in the XBee User’s Guide, Chapter 10.

In writing this post I learned about the moltosenso IRON, a free cross-platform alternative to X-CTU. I have not tried it, but it might be useful for those who need to do more complicated configurations.

Building a fire-fighting robot

I’ve been looking for a reason to build another robot. A few weeks ago I found a list of robot competitions around the world. Ignoring the battle bot options, geography and capability led me to the Penn State Abington Fire-Fighting Robot Contest:

The objective of the fire-fighting robot contest is to design a computer-controlled robot to navigate a maze (8 ft. by 8 ft.) that consists of 4 rooms. Rooms are surrounded by walls except for a 18″ entrance. A single candle is randomly placed in one of the 4 rooms. The goal is for the mobile robot to explore the maze, locate the candle, and extinguish the candle in the minimum time. Robots must be within 12″ of candle before extinguishing candle. The layout and dimensions of the maze and rooms are fully known to all contestants prior to the contest. For the advanced divisions, the hallways and room may be covered with carpeting, and there is a small staircase located within the maze. Bonuses are earned for returning to the start position after extinguishing the candle, and allowing obstacles to be placed in the rooms. Participants are permitted to use any combination of building materials and computer technology. All robots must operate autonomously except for the K-5th grade remote control division.

This is not a simple task, but I was particularly excited to see the K-5th grade remote control division. This allows us to participate as a family; my kids can drive the robot to the candle and extinguish it, while my entry must be autonomous. Robots cannot be larger than 12.25″ in any dimension.

At this point, I’ve figured out the basic design. I believe the most difficult task will be navigation, especially as the robot will have limited sensor capability. The robot may start from a known position in the maze, and I will take this option. I would like to use existing software; many brilliant people have worked on this problem. A few months ago I took a great course on the Robot Operating System (ROS), taught at HacDC by Andrew Harris.* ROS has the firepower to do the job, but there is a steep learning curve, it must run on a full PC, and I will have to use Ubuntu and Python, two environments I’m not familiar with. In doing the course assignments, figuring out Ubuntu and Python slowed me up more than anything else. However, I already have a working setup from the class, and with Google all things are possible. So my plan is as follows:

I will run ROS on my laptop. The robot will have an Arduino microcontroller as its brain. The laptop and the robot will communicate wirelessly using a pair of XBees. ROS has a package, rosserial_arduino, which allows the Arduino to run little ROS nodes that can send sensor and odometry data over the serial port to the ROS nodes on the laptop. The laptop nodes will do the heavy computation and reply back with navigation directives. The XBees make the serial port communication wireless.

Although the ROS site has some good tutorials, setting up the XBees on Ubuntu to run the demo Arduino programs was not obvious. Their rosserial_xbee package is designed for a mesh rather than point to point, and the two modes are not compatible. And the XBee configuration tool is Windows only. I got it working — if I have time, I will write up a post on how to do all of this — and was quite impressed with the performance of the XBees. I walked outside my house for a few hundred feet and was still able to communicate with the XBee in the upstairs office. The big risk with this design is that if the wireless communication fails, the robot is dead in the water. My XBee series 1 802.15.4s have a range of 90m and operate at 2.4 GHz, so they should work well. I did of course change the default PAN ID to avoid interference from any other competitors with the same device.

One nice thing about the wireless design is that the kids can use the same robot for their entry. I will just hook up a joystick to the PC and it will send commands via ROS. Even if my autonomous design fails, and I give it a 50% chance given the total novelty of all this, theirs should have a better chance.

I purchased 10 ultrasonic sensors (HC-SR04) from China for the price of one PING))): $3 each on eBay with free shipping, and they arrived quickly. Currently waiting for the chassis, gearmotors, wheels, and encoders to arrive. I opted for a mostly circular ABS chassis that is 7″ in diameter. The wheel encoders were more expensive than anything else, so it will be interesting to see how well they work. I already have Arduinos and servo motors from other projects.
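For reference, the HC-SR04 reports distance as the width of its echo pulse: after a 10 µs trigger pulse, the echo pin stays high for the round-trip time of the sound. On an Arduino that width is typically measured with `pulseIn(echoPin, HIGH)`, which returns microseconds. The conversion is worked below in Python; the function names are hypothetical, and 331.3 + 0.606·T m/s is a standard approximation for the speed of sound in air.

```python
SPEED_OF_SOUND_M_PER_S = 343.0   # dry air at about 20 degrees C


def speed_of_sound(temp_c):
    """Approximate speed of sound in air (m/s) at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c


def echo_to_cm(echo_us, speed_m_per_s=SPEED_OF_SOUND_M_PER_S):
    """Convert an HC-SR04 echo pulse width (microseconds) to distance in cm.

    The pulse covers the round trip, so the one-way distance is half of
    speed * time. At 343 m/s this works out to roughly echo_us / 58.
    """
    cm_per_us = speed_m_per_s * 100.0 / 1_000_000.0   # 0.0343 cm per microsecond
    return echo_us * cm_per_us / 2.0
```

For example, a 583 µs echo corresponds to about 10 cm. The temperature correction is small indoors, but it adds up at the far end of the sensor’s range.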

In the meantime, there is a lot of software work to be done. The ROS navigation stack is not at all simple to understand, nor are the simulation tools. But I am making progress. I already have a simple robot model URDF file, and understand how to translate frames of reference using tf. I should be able to load a 2D map of the maze using a properly dimensioned image, so the next step is to generate that.
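A properly dimensioned image can be generated rather than drawn. The sketch below rasterizes the 8 ft × 8 ft arena into a grayscale PGM (black = occupied, white = free) and writes the companion YAML that ROS’s map_server reads, where `origin` is the pose of the lower-left pixel. The interior walls are left to the caller because the contest layout isn’t reproduced here. The function name, 1 cm resolution, 0.75″ wall thickness, and threshold values (the ones used in the map_server documentation’s example) are all assumptions.

```python
INCH_M = 0.0254


def make_arena_map(walls_in, size_in=96, resolution_m=0.01, wall_in=0.75):
    """Rasterize an arena into a grayscale PGM (P5) plus a map_server YAML.

    walls_in: list of (x0, y0, x1, y1) axis-aligned wall segments in inches,
    measured from the arena's lower-left corner. The outer boundary is
    added automatically. Wall thickness wall_in is an assumed value.
    Returns (pgm_bytes, yaml_text).
    """
    pixels = int(round(size_in * INCH_M / resolution_m))
    grid = [[255] * pixels for _ in range(pixels)]      # 255 = free (white)

    def to_px(inches):
        return int(round(inches * INCH_M / resolution_m))

    half = wall_in / 2.0
    boundary = [(0, 0, size_in, 0), (0, size_in, size_in, size_in),
                (0, 0, 0, size_in), (size_in, 0, size_in, size_in)]
    for x0, y0, x1, y1 in boundary + list(walls_in):
        # Inflate the segment into a rectangle of the given thickness.
        cx0, cx1 = to_px(min(x0, x1) - half), to_px(max(x0, x1) + half)
        cy0, cy1 = to_px(min(y0, y1) - half), to_px(max(y0, y1) + half)
        for row_y in range(max(cy0, 0), min(cy1, pixels - 1) + 1):
            for col_x in range(max(cx0, 0), min(cx1, pixels - 1) + 1):
                # Image rows run top-down while map y runs bottom-up.
                grid[pixels - 1 - row_y][col_x] = 0    # 0 = occupied (black)
    header = f"P5\n{pixels} {pixels}\n255\n".encode("ascii")
    pgm = header + bytes(v for row in grid for v in row)
    yaml_text = (
        "image: arena.pgm\n"
        f"resolution: {resolution_m}\n"
        "origin: [0.0, 0.0, 0.0]\n"
        "negate: 0\n"
        "occupied_thresh: 0.65\n"
        "free_thresh: 0.196\n"
    )
    return pgm, yaml_text
```

At 1 cm per pixel, 96″ is 2.4384 m, so the image comes out 244 pixels on a side. Writing `pgm` to `arena.pgm` and `yaml_text` to a `.yaml` file next to it gives map_server both pieces.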

The contest is April 21st — not far away!

*The HacDC ROS class wiki is publicly available and is complete enough to learn from on your own.